AI Wrangler: Job of the Future Combines Tech and Humanities

This job doesn't exist yet, but it will. Meet the AI Wrangler: part IT guy, part psychiatrist, part detective.

Jensen Huang, founder and CEO of NVIDIA, was recently asked if he could share any advice for students and young people trying to navigate an uncertain tech future. His response revealed a future job that is just on the horizon:

If I were a student today, the first thing I would do is learn AI. How do I learn how to interact with ChatGPT? How do I learn how to interact with Gemini Pro? And how do I learn how to interact with Grok?

Huang has a technical background in electrical engineering. His company has become one of the most valuable on the planet because the rise of AI has been built on NVIDIA chips. So it is not surprising that he suggests learning AI, but it is his clarification of what that expertise looks like that is revelatory:

And learning how to interact with AI is not unlike being someone who is really good at asking questions. And prompting AI is very similar. You can’t just randomly ask a bunch of questions. So asking AI to be an assistant to you requires some expertise and artistry to prompt it.

That’s right, it is not just about computer science or coding. Huang explicitly mentions the artistry that must accompany the expertise. Becoming an expert in AI will mean welding together STEM and the humanities. One job that will be born out of this fusion is something called the AI Wrangler.

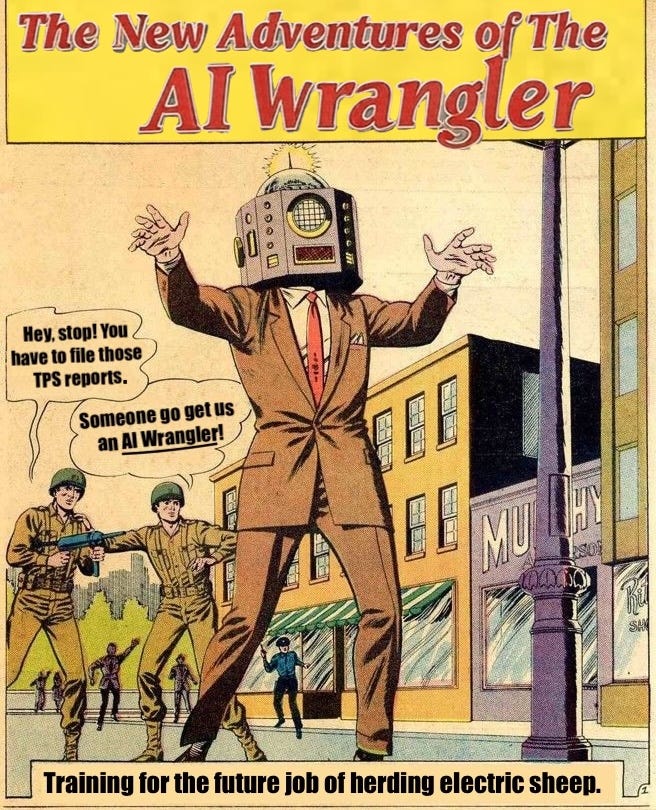

The AI Wrangler, Chatbot Cowboy, Chaps GPT, Prompt Jockey, Electric Sheep Herder: call it whatever we want. In the future, every office will have a person with deep expertise in engaging with AI from both a technical and a humanistic angle.

What Will the AI Wrangler Job Look Like?

AI has already become a massive part of work in a lot of sectors, and the technology will only continue to absorb more and more tasks. The good news is that the tasks best suited for AI are often annoying, tedious, and highly technical. So in the future, no one has to file that TPS report—the robots will do it for us.

The bad news is that AI can be finicky. It doesn’t always work right, and the output can be wrong. Often, getting AI to do a task correctly, or to do it at all, takes some convincing. That’s where the AI Wrangler comes in.

An AI Wrangler will be part IT guy, part psychiatrist, part detective. Everyone will need some of these skills, but there will be people with these as their primary responsibilities. Below, I provide some descriptions of what a career as an AI Wrangler might look like, along with some (simplistic) examples.

Knowing the AI Personalities

All of the AI tools out there are a little bit different. No two of them react in exactly the same way to a given prompt or command. It will be the AI Wrangler’s job to know which AI is best suited to which task.

For instance, I’ve noticed that Grok’s image generator likes to create people, even when I don’t ask for them. Recently, I was trying to create an image of an imaginary game box about higher education that I wanted for a class activity. Grok kept spitting out people, no matter the prompt, never the box. When I switched over to Copilot, it worked immediately.

I am not sure whether this is some kind of misguided attempt by Elon Musk to show that his AI isn’t like the others, in reaction to Google’s disastrous Gemini launch. Either way, knowing the difference is a simple example of AI Wrangler knowledge.

Companies put up strict guardrails because they don’t want to get sued or face other liabilities. They also build political or other cultural values into their models, as with Musk and Google. The AI Wrangler will have to understand these variations, which can be minute and nuanced depending on the specific task.

Psychologist

Given their personalities, AI models can sometimes be moody and lazy about the tasks they are given. It makes sense considering the models are all trained on real human sources. Real humans, after all, are moody and lazy. The machines we build are a reflection of us, flaws and all.

Given these guardrails and the moods of each AI, I often find myself annoyed with the various models refusing to do what I have asked. Sometimes, they just stop doing a task right in the middle of it.

One example I have seen is in transcriptions. I regularly use the various models to clean up transcripts of audio interviews, but sometimes the services simply stop or skip over half of the text for no apparent reason. I am not sure whether this is programmed in to conserve energy or whether something else is going on; it is annoying, though.

I’m reminded of HAL 9000 in 2001: A Space Odyssey (1968) famously saying, “I’m sorry, Dave, I’m afraid I can’t do that.” It feels like AI is already at that point in real life, constantly telling users no. The problem is not so much that AI can’t do that as that AI won’t do that.

Because these barriers are artificial or possibly bugs, there are usually ways around the roadblocks; they just require the right asks. Here’s a crass example from the ChatGPT subreddit where the users often share all kinds of experiments and interactions with the tech.

Granted, I don’t think a company or agency is going to want their employees trying to figure out how to get their AI models to flip the bird, but it just illustrates that barriers are not absolute. There will be creative ways to get the AI models to do the things that the organization needs. The AI Wrangler’s job is to ensure these tools can do that.

Dealing With Growing Children AI

AI is constantly changing, through updates or just by learning from its users. These changes impact the AI’s personality and outputs. The AI Wrangler must try to understand the changes as they happen.

For instance, ChatGPT was criticized early on for using too many commas (it still produces too many long sentences). But when OpenAI tweaked the model, it made the em dash the new overused punctuation identifier. The kids even call the em dash the “ChatGPT Hyphen.”

Users have wondered whether the engineers explicitly programmed the model to use more em dashes—no one is sure, and even OpenAI’s team may not be able to predict every effect (note: I’m still using it! ChatGPT copied my work, not the other way around). The main takeaway here is that tweaks on the backend have profound impacts on user experiences on the frontend, even if unintended.

The AI Wrangler will not be a person traditionally digging in the code on the backend (although they may or may not have some of those skills, too). Rather, this future job calls for someone who constantly tests and prods the growing AI, trying to see the different effects of the updates to ensure consistent output.
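As a rough illustration of that kind of prodding, here is a minimal Python sketch that measures how often a punctuation mark appears per 100 words and flags a jump between two saved responses. The function names, the sample strings, and the 1.0 threshold are all hypothetical, chosen only for illustration. A real check would compare many responses to the same prompts before and after an update.

```python
import re

EM_DASH = "\u2014"  # the em dash character, written as an escape sequence


def rate_per_100_words(text: str, mark: str = EM_DASH) -> float:
    """Return how many times `mark` appears per 100 words of `text`."""
    words = re.findall(r"\w+", text)
    if not words:
        return 0.0
    return 100.0 * text.count(mark) / len(words)


def style_drifted(before: str, after: str, mark: str = EM_DASH,
                  threshold: float = 1.0) -> bool:
    """Flag a change in the usage rate of `mark` larger than `threshold`.

    The threshold is an assumed cutoff, not an established standard.
    """
    change = abs(rate_per_100_words(after, mark) - rate_per_100_words(before, mark))
    return change > threshold


# Hypothetical responses to the same prompt, saved before and after a model update.
old_response = "The report is done, and the figures are attached, as requested."
new_response = "The report is done\u2014figures attached\u2014as requested."

print(style_drifted(old_response, new_response))
```

The same comparison could be pointed at commas, sentence length, or refusal phrases. The point is simply to keep a record of outputs so that a change in the model's habits shows up as a number rather than a hunch.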

Detective Safety Inspector

It is no secret that AI gets things wrong. This can happen through hallucination or simply through missing context. While the tech is certainly improving in this regard, accidents will always be possible.

The AI Wrangler’s job will be to understand why and how something went wrong.

For instance, I have tried to use AI to help search for various facts or figures for articles here. I still do not trust the figures provided, so I always ask for a link that I can verify. Usually, I get the link that I need, but on occasion I have noticed that the AI has misquoted a source, lied, or been unable to provide a link.

When I was writing my article "Universities Are Job Centers For the Entire Country," I was looking for some higher ed GDP figures to cite. Grok suggested I cite a number from a report by the American Council on Education (ACE) entitled "The Economic Impact of Higher Education in America."

The problem was that I could not find the report anywhere online. And the citation Grok gave me was a tweet in which someone had posted an answer they got from Grok. It was circular logic. Obviously, I couldn’t use it. But I am careful; others will not be, and we will get false or fake information.

If AI is getting things wrong or hallucinating, everything will have to be double-checked by hand. Having to recheck everything doesn’t save time or money at all.

Like an industrial safety officer, the AI Wrangler will have to help ensure things go right the first time through monitoring and auditing. There might be accidents, just as there are in workplaces now. The AI Wrangler will have to investigate why something went wrong and how to ensure it doesn’t happen again.
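One small piece of that auditing could be automated. The Python sketch below triages a citation that an AI hands back into three buckets: no usable link, a link to a social media post rather than a primary source, or a link that a human still needs to open and read. The domain list, the labels, and the sample URLs are assumptions made for illustration. Passing this check does not mean a source is real or says what the AI claims.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed list of social platforms where a post is not a primary source.
SOCIAL_DOMAINS = {"twitter.com", "x.com", "reddit.com", "facebook.com"}


def triage_citation(url: Optional[str]) -> str:
    """Sort an AI-provided citation into a rough bucket for human review."""
    if not url or not url.strip():
        return "no usable link"
    # hostname is None when the string has no scheme or is not a URL at all.
    host = (urlparse(url.strip()).hostname or "").lower()
    if not host:
        return "no usable link"
    if host.startswith("www."):
        host = host[4:]
    # Match the domain itself or any subdomain of it (e.g. mobile.twitter.com).
    if any(host == d or host.endswith("." + d) for d in SOCIAL_DOMAINS):
        return "secondary source: find the original"
    return "verify by hand"


# Hypothetical URLs, used only to show the three outcomes.
samples = [None, "https://x.com/someuser/status/123", "https://www.example.org/report.pdf"]
for link in samples:
    print(link, "->", triage_citation(link))
```

Note that even the best outcome here is "verify by hand." A script like this can only catch the obvious failures early; the judgment call about whether a report exists and is quoted accurately stays with a person.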

Schools Should Foster AI Wranglers

After I wrote almost this entire article, I was a bit disappointed to find I wasn’t the first person to label this job as “AI Wrangler.” It was out in the world already! So I wanted to add something of my own: how this future job relates to education.

One critique of education that I often hear is that we don’t train students in practical skills. In other words, there is a disconnect between what students are doing in class and the real-world workplace.

While I do think there is some truth to this, I also believe that the critique is overblown. Education cannot solely be focused on employment. The advent of the AI Wrangler job is a good example of this. It certainly did not exist when I was in college; it barely exists right now.

Our education system should focus on broad learning that can help people adapt to what is to come. Given that the AI Wrangler job requires aspects of STEM and humanities, a liberal arts education that offers a breadth of disciplines provides the skills needed to succeed in an environment dominated by this innovation.

This interdisciplinary approach is one reason why Netflix co-founder Reed Hastings gave a $50 million gift to Bowdoin College to establish the Hastings Initiative for AI and Humanity. Bowdoin is a small liberal arts college that excels in the exact kind of humanistic education an AI Wrangler will need.

Hastings lauded the value of this philosophy in an interview:

I would say a liberal arts education prepares you to be curious about the world, and trying to understand what’s a good life and what’s a good society. And that that’s very powerful and helpful in whatever you do, whether that’s government service, whether that’s teaching, whether that’s in business. And learning some specific set of skills is not that important. And even business changes so much. The businesses that were important when I left Bowdoin in ’83 are completely different than today.

This means that we must not simply train students on how to use certain AI models or other technology, but rather teach them to think and learn. To echo Huang’s thoughts from the introduction, it is not about coding or computer science per se; the future is about asking the right questions.

Not every student will grow up to be an AI Wrangler, just like not every student becomes a tech guy today. But just as basic computer competency has become a requirement to navigate the modern world, so too will aspects of the AI Wrangler skillset.

Setting students up for success means that schools must continue teaching both technological and humanistic approaches. It will be through this duality that we foster the AI Wrangler job of the future.